5 IPC and locking

Interprocess communication and how to not shoot yourself in the foot

6 Inter-Process Communication

6.1 Remainder on Process-level parallelization

Main Process

┌─────────────┐

│ │

│ CPU │

├─────────────┤

│ │

│ Memory │

└─┬────┬────┬─┘

│ │ │

│ │ │

┌───────────┘ │ └───────────┐

│ │ │

┌──────▼──────┐ ┌──────▼──────┐ ┌──────▼──────┐

│ │ │ │ │ │

│ CPU │ │ CPU │ │ CPU │

├─────────────┤ ├─────────────┤ ├─────────────┤

│ │ │ │ │ │

│ Memory │ │ Memory │ │ Memory │

└─────────────┘ └─────────────┘ └─────────────┘

Process 1 Process 1 Process 1┌──────────────────────────────────────┐

│ MAIN PROCESS │

│ │

│ │

│ ┌──────────┐ │

│ │ │ │

│ │ CPU │ ┌───────────┐ │

│ │ │ │ │ │

│ └──────────┘ │ Memory │ │

│ │ │ │

│ └───────────┘ │

│ │

│ │

│ │

│ ┌─┐ ┌─┐ ┌─┐ │

│ │┼│ │┼│ │┼│ │

│ │┴│ │┴│ │┴│ │

│ ▼▼▼ ▼▼▼ ▼▼▼ │

│ Thread 1 Thread 2 Thread 3 │

│ ┌─┐ ┌─┐ ┌─┐ │

│ │┼│ │┼│ │┼│ │

│ │┴│ │┴│ │┴│ │

│ ▼▼▼ ▼▼▼ ▼▼▼ │

│ │

└──────────────────────────────────────┘6.2 Inter-process is easy…

- But if my algorithm is not “embarrassingly parallel”, what if we want to share data between processes ?

- let’s go for Shared Memory

6.4 Aside : memory models

There are differents models

6.6 When to use queues?

An algorithm with two computations A and B where :

- B depends on the result of A

- A is independent of B

. . .

A could be a producer for B, and B a consumer for A.

6.7 How to use queues?

┌───────────┐

│ │

│ Producer │

│ │ Process A

│ │

└─────┬─────┘

│

┌────┼───────────────────────────────────────────────────────────────────┐

│ │ Queue │

│ │ ┌─────┬─────┬─────┬─────┬─────┬─────┬─────┐ │

│ │ │ │ │ │ │ │ │ │ │

│ └───────►│ │ │ │ │ │ │ ├──────────┐ │

│ │ │ │ │ │ │ │ │ │ │

│ └─────┴─────┴─────┴─────┴─────┴─────┴─────┘ │ │

│ │ │

│ Shared Memory │ │

└──────────────────────────────────────────────────────────────────┼─────┘

│

▼

┌───────────┐

│ │

Process B │ Consumer │

│ │

│ │

└───────────┘6.8 Producer/consumer, Examples

- A finds primes in a list of number, B formats and prints them every 10 numbers found.

- A fetches a bunch of images on the web, B downloads them and saves them to disk.

- A takes the orders in the restaurant, B cooks them.

. . .

7 More on locking

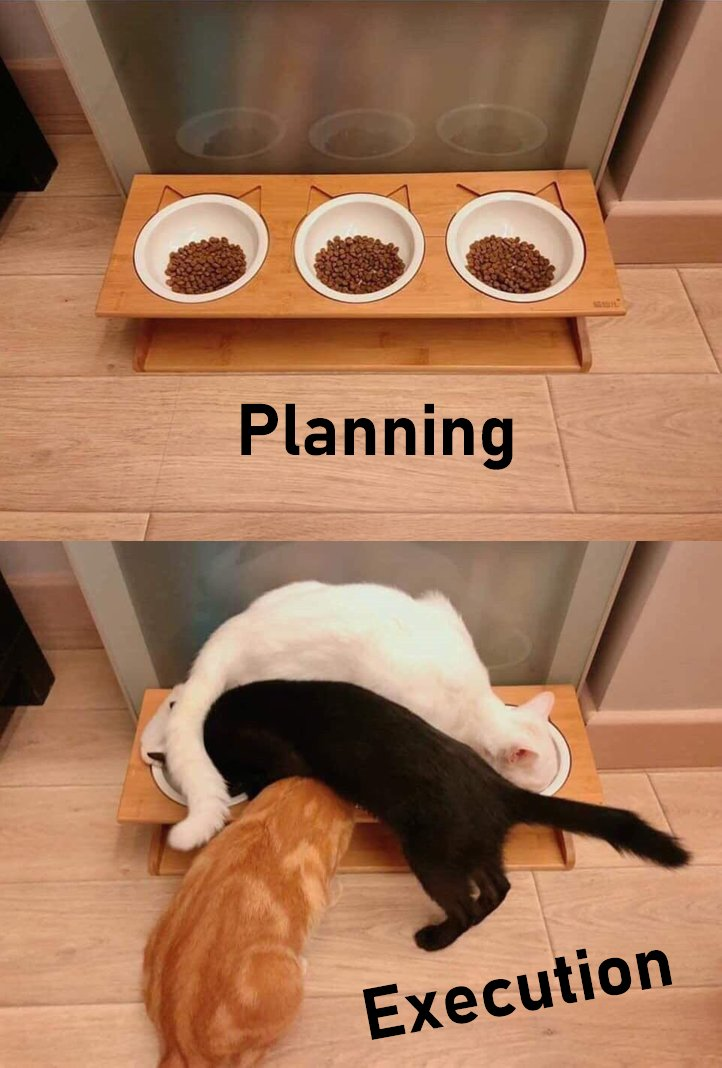

7.1 The main gotcha

what if several processes want to write/read the same shared memory portions at the same time?

. . .

Enter the realm of the dreaded race condition

7.2 Simple example

Printing from several processes a string with 10 times the same char.

from multiprocessing.pool import Pool

from itertools import repeat

# print "AAAAAAAAA", "BBBBBBBBBBB" etc.

def repeat10Cap(c):

print("".join(repeat(chr(c+65),10)))

with Pool(8) as pool:

pool.map(repeat10Cap, range(10))Output:

AAAAAAAAAACCCCCCCCCCBBBBBBBBBBDDDDDDDDDDEEEEEEEEEE

FFFFFFFFFFGGGGGGGGGG

IIIIIIIIII

HHHHHHHHHH

JJJJJJJJJJ

8 The answer : critical section

A critical section is :

- a multiprocessing (and also multithreading) primitive which decorates a portion of code.

- guaranteed to be run by only ONE process at a time.

┌─────────────┐

│ │

│ Normal │

│ Code │ Parallelized

│ │

└──────┬──────┘

│

┌──────▼──────┐

│ │

│ Critical │ Not parallelized

│ Section │

│ │

└──────┬──────┘

│

┌──────▼──────┐

│ │

│ Normal │ Parallelized

│ Code │

│ │

└─────────────┘8.1 Critical section workflow

8.2 A simple implementation in Python : Lock

from multiprocessing.pool import Pool

from multiprocessing import Lock

from itertools import repeat

lock = Lock()

def safe_repeat10Cap(c):

with lock:

# Beginning of critical section

print("".join(repeat(chr(c+65),10)))

# End of critical section

with Pool(8) as pool:

pool.map(safe_repeat10Cap, range(10))Output:

AAAAAAAAAA

BBBBBBBBBB

CCCCCCCCCC

DDDDDDDDDD

EEEEEEEEEE

FFFFFFFFFF

GGGGGGGGGG

HHHHHHHHHH

IIIIIIIIII

JJJJJJJJJJ9 When to use locks ?

- Concurrent access to shared data structures

- Structural consistency not guaranteed.

9.1 Consistency problems with FIFO example I

Process A (resp. B) wants to push x (resp. y) on the list.

9.2 Consistency problems with FIFO example 2

Process A and B both want to pop the list.

9.3 (No) Consistency problems with FIFO example 3

. . .

⚠ ⚠ As long the list is not empty ⚠ ⚠

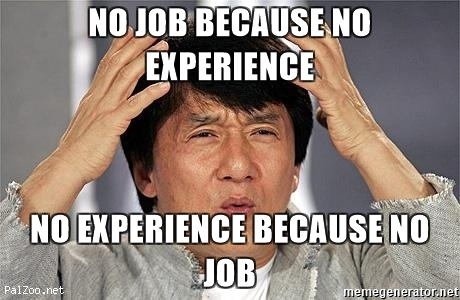

10 Locking, refined

Beware of putting locks everywhere… Beware…

. . .

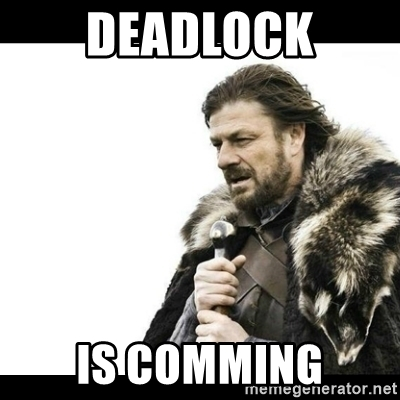

10.1 Deadlock example

10.2 Deadlock (serious) example

Process A acquires lock L1. Process B acquires lock L2. Process A tries to acquire lock L2, but it is already held by B. Process B tries to acquire lock L1, but it is already held by A. Both processes are blocked.

10.3 Avoiding Deadlocks

There is several ways to avoid deadlocks. One of them is the Dijkstra’s Resource Hiearchy Solution.

. . .

In the previous example, processes should try the lowest numbered locks first. Instead of B acquiring L2 first, it should tries to acquire L1 instead and L2 after.

. . .

This solution isn’t universal but is pretty usable in general case.

11 Conclusion

Diving (a little) deeper into parallelism, when computations are NOT independent of each other (no embarrasingly parallel approach), we need a way to decouple processing of data, while still keeping the dependancies intact.

. . .

\Longrightarrow Shared Memory and Queues to the rescue

. . .

With the concurrent use of ressources, there are two pitfalls to be aware of:

- Race Conditions, solution : locking

- Deadlocks, solution : careful and consistent ordering of locks.